PyTorch (3) Linear Regression

まずは基本ということで線形回帰(Linear Regression)から。人工データとBoston house price datasetを試してみた。まだ簡単なのでCPUモードのみ。GPU対応はまた今度。

人工データセット

import torch import torch.nn as nn import numpy as np import matplotlib.pyplot as plt # hyper parameters input_size = 1 output_size = 1 num_epochs = 60 learning_rate = 0.001

データセット作成

# toy dataset # 15 samples, 1 features x_train = np.array([3.3, 4.4, 5.5, 6.71, 6.93, 4.168, 9.779, 6.182, 7.59, 2.167, 7.042, 10.791, 5.313, 7.997, 3.1], dtype=np.float32) y_train = np.array([1.7, 2.76, 2.09, 3.19, 1.694, 1.573, 3.366, 2.596, 2.53, 1.221, 2.827, 3.465, 1.65, 2.904, 1.3], dtype=np.float32) x_train = x_train.reshape(15, 1) y_train = y_train.reshape(15, 1)

nn.Linear への入力は (N,∗,in_features) であるため reshape が必要。* には任意の次元を追加できるが今回は1次元データなのでない。

モデルを構築

# linear regression model class LinearRegression(nn.Module): def __init__(self, input_size, output_size): super(LinearRegression, self).__init__() self.linear = nn.Linear(input_size, output_size) def forward(self, x): out = self.linear(x) return out model = LinearRegression(input_size, output_size)

PyTorchのモデルはChainerと似ている。

- nn.Module を継承したクラスを作成

__init__()に層オブジェクトを定義forward()に順方向の処理

LossとOptimizer

# loss and optimizer

criterion = nn.MSELoss()

optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

- 線形回帰なので平均二乗誤差(mean squared error)

- OptimizerはもっともシンプルなStochastic Gradient Descentを指定

訓練ループ

# train the model for epoch in range(num_epochs): inputs = torch.from_numpy(x_train) targets = torch.from_numpy(y_train) optimizer.zero_grad() outputs = model(inputs) loss = criterion(outputs, targets) loss.backward() optimizer.step() if (epoch + 1) % 10 == 0: print('Epoch [%d/%d], Loss: %.4f' % (epoch + 1, num_epochs, loss.item())) # save the model torch.save(model.state_dict(), 'model.pkl')

- 各エポックでは

zero_grad()で勾配をクリアすること! - パラメータは

optimizer.step()で更新される - 10エポックごとに訓練lossを表示する

- 最後にモデルを保存

訓練ループはもっと洗練させないと実用的ではないな。

実行結果

Epoch [10/100], Loss: 1.4917 Epoch [20/100], Loss: 0.3877 Epoch [30/100], Loss: 0.2065 Epoch [40/100], Loss: 0.1767 Epoch [50/100], Loss: 0.1719 Epoch [60/100], Loss: 0.1710 Epoch [70/100], Loss: 0.1709 Epoch [80/100], Loss: 0.1709 Epoch [90/100], Loss: 0.1709 Epoch [100/100], Loss: 0.1708

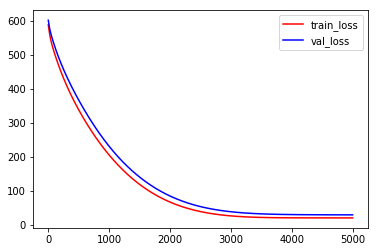

最後に訓練データと予測した直線を描画してみよう。

# plot the graph predicted = model(torch.from_numpy(x_train)).detach().numpy() plt.plot(x_train, y_train, 'ro', label='Original data') plt.plot(x_train, predicted, label='Fitted line') plt.legend() plt.show()

- 勾配(grad)を持っているTensorはそのままnumpy arrayに変換できない。

detach()が必要

RuntimeError: Can't call numpy() on Variable that requires grad. Use var.detach().numpy() instead.

Boston house price dataset

次は家の価格のデータセットもやってみよう。13個の特徴量をもとに家の値段を予測する。入力層が13ユニットで出力層が1ユニットの線形回帰のネットワークを書いた。

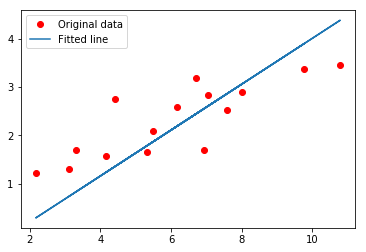

import torch import torch.nn as nn import numpy as np import matplotlib.pyplot as plt from sklearn.datasets import load_boston from sklearn.model_selection import train_test_split from sklearn.preprocessing import StandardScaler import matplotlib.pyplot as plt # hyper parameters input_size = 13 output_size = 1 num_epochs = 5000 learning_rate = 0.01 boston = load_boston() X = boston.data y = boston.target X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.33, random_state = 5) # データの標準化 scaler = StandardScaler() X_train = scaler.fit_transform(X_train) X_test = scaler.transform(X_test) y_train = np.expand_dims(y_train, axis=1) y_test = np.expand_dims(y_test, axis=1) # linear regression model class LinearRegression(nn.Module): def __init__(self, input_size, output_size): super(LinearRegression, self).__init__() self.linear = nn.Linear(input_size, output_size) def forward(self, x): out = self.linear(x) return out model = LinearRegression(input_size, output_size) # loss and optimizer criterion = nn.MSELoss() optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate) def train(X_train, y_train): inputs = torch.from_numpy(X_train).float() targets = torch.from_numpy(y_train).float() optimizer.zero_grad() outputs = model(inputs) loss = criterion(outputs, targets) loss.backward() optimizer.step() return loss.item() def valid(X_test, y_test): inputs = torch.from_numpy(X_test).float() targets = torch.from_numpy(y_test).float() outputs = model(inputs) val_loss = criterion(outputs, targets) return val_loss.item() # train the model loss_list = [] val_loss_list = [] for epoch in range(num_epochs): # data shuffle perm = np.arange(X_train.shape[0]) np.random.shuffle(perm) X_train = X_train[perm] y_train = y_train[perm] loss = train(X_train, y_train) val_loss = valid(X_test, y_test) if epoch % 200 == 0: print('epoch %d, loss: %.4f val_loss: %.4f' % (epoch, loss, val_loss)) loss_list.append(loss) val_loss_list.append(val_loss) # plot learning curve plt.plot(range(num_epochs), loss_list, 'r-', label='train_loss') plt.plot(range(num_epochs), val_loss_list, 'b-', label='val_loss') plt.legend()

- データを平均0、標準偏差1に標準化すると結果が安定する

- テストデータには訓練データでfitしたscalerを適用する

train()とvalid()をそれぞれ関数として独立させた。このようにまとめると訓練ループがすっきりするのでよいかも。- 200エポックごとにログを出力した

実験結果

epoch 0, loss: 582.9910 val_loss: 594.2480 epoch 200, loss: 453.9804 val_loss: 479.6869 epoch 400, loss: 373.9557 val_loss: 402.7326 epoch 600, loss: 308.8472 val_loss: 337.8119 epoch 800, loss: 253.5647 val_loss: 281.1577 epoch 1000, loss: 206.5357 val_loss: 232.3899 epoch 1200, loss: 166.8685 val_loss: 191.0127 epoch 1400, loss: 133.7838 val_loss: 156.2874 epoch 1600, loss: 106.5488 val_loss: 127.4714 epoch 1800, loss: 84.4694 val_loss: 103.8716 epoch 2000, loss: 66.8853 val_loss: 84.8388 epoch 2200, loss: 53.1687 val_loss: 69.7598 epoch 2400, loss: 42.7244 val_loss: 58.0542 epoch 2600, loss: 34.9919 val_loss: 49.1742 epoch 2800, loss: 29.4506 val_loss: 42.6082 epoch 3000, loss: 25.6266 val_loss: 37.8878 epoch 3200, loss: 23.0996 val_loss: 34.5939 epoch 3400, loss: 21.5107 val_loss: 32.3652 epoch 3600, loss: 20.5666 val_loss: 30.9023 epoch 3800, loss: 20.0406 val_loss: 29.9688 epoch 4000, loss: 19.7679 val_loss: 29.3874 epoch 4200, loss: 19.6375 val_loss: 29.0325 epoch 4400, loss: 19.5806 val_loss: 28.8196 epoch 4600, loss: 19.5581 val_loss: 28.6940 epoch 4800, loss: 19.5501 val_loss: 28.6216